Abstract

This article explains transaural crosstalk, which arises when two speakers are placed close together and sound intended for one ear also reaches the other ear. It shows how phase delay can be used to create a 3D effect by producing acoustical signals at the two ears as they would occur in a normal listening situation. The MAX9775 headphone amplifier is given as an example device.

A similar article appeared on the Audio DesignLine, August 2006.

Introduction

Stereophonic sound is usually achieved only with a considerable amount of separation between the speakers. However, many applications such as handheld computers and cellular phones must mount their speakers close together. For those designs, you can simulate stereo by employing wave interference to cancel left-channel sounds in the listener's right ear and right-channel sounds in the left ear. Called transaural crosstalk cancellation, this technique yields an apparent separation between speakers that is at least four times greater than the actual separation.

Theory

To better understand this phenomenon, consider how the ears and brain process information to determine the location of a sound source. The human ear is sensitive to what we call "audible" sound waves in the approximate range 20Hz to 20kHz. As a sound wave reaches the ear, it is shaped by the outer ear before reaching the eardrum. This reshaping changes the wave's resonant properties according to the direction from which it entered the ear. The resulting spectrum allows the brain to determine the direction from which the sound wave originated.

As sound waves propagate towards a listener's head from a given direction, any slight difference in arrival times at the left and right ears also helps the listener to determine the direction of the source. This time delay, known as ITD (interaural time delay), operates with the ear's spectral properties to determine the HRTF (head-related transfer function)1 associated with each ear. The HRTF is a mathematical transfer function that relates a specific sound source to the ears of the listener. It accounts for the source location in terms of distance to the listener's head, the separation distance between the ears, and the sound frequency.
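To give a feel for the size of the ITD, the sketch below uses a simple far-field path-difference model, which is an assumption and not a formula from this article: the extra distance to the far ear is taken as the ear spacing times the sine of the source azimuth. The 343m/s speed of sound and the function name `itd_seconds` are likewise assumptions chosen for illustration; the 20cm ear spacing is the head width used later in this article.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed value)
EAR_SPACING = 0.20      # m, the head width assumed later in this article


def itd_seconds(azimuth_deg, ear_spacing=EAR_SPACING, c=SPEED_OF_SOUND):
    """Far-field ITD estimate: extra path to the far ear divided by c.

    azimuth_deg is measured from straight ahead (0) toward one side (90).
    This ignores diffraction around the head, so it is only a rough model.
    """
    path_difference = ear_spacing * math.sin(math.radians(azimuth_deg))
    return path_difference / c


for azimuth in (0, 30, 90):
    print(f"{azimuth:3d} deg -> {itd_seconds(azimuth) * 1e6:6.1f} us")
```

Under these assumptions the delay for a source directly to one side is a little under 0.6ms, which shows how small the timing cues are that the brain relies on.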

The basic idea for simulating a 3D effect is to produce acoustical signals at the two ears as the signals would occur in a normal listening situation. This 3D effect is accomplished by combining each source signal with the pair of HRTFs corresponding to the direction of the source.2
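In the time domain, combining a source signal with a pair of HRTFs amounts to convolving it with the corresponding pair of head-related impulse responses. The following is a minimal sketch of that step; the impulse responses `hrir_left` and `hrir_right` are made-up toy values (a pure delay and attenuation), not measured data, and the helper `convolve` is written out only to keep the example self-contained.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


# Toy impulse responses (hypothetical): the source sits to the listener's
# left, so the left ear hears it at once and at full level, while the right
# ear hears it two samples later and attenuated.
hrir_left = [1.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.6]

source = [1.0, 0.5, -0.25]

left_ear = convolve(source, hrir_left)
right_ear = convolve(source, hrir_right)

print("left ear :", left_ear)
print("right ear:", right_ear)
```

A real HRTF pair also reshapes the spectrum, so measured impulse responses are much longer than these three-sample placeholders.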

Multimedia 3D Enhancement

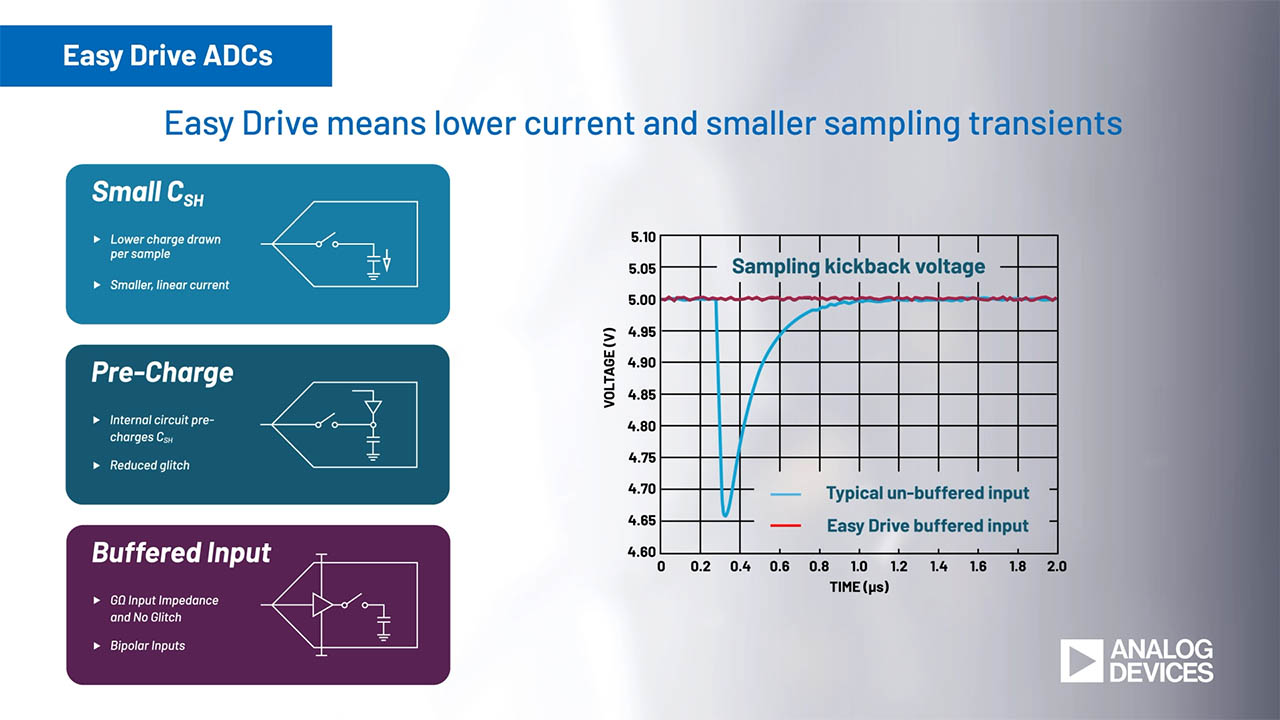

Most stereo multimedia products that offer 3D enhancement do not account for all the directional information needed to create a fully 3-dimensional sound. In most cases, those multimedia systems employ HRTFs consisting of simple phase-delay circuits, which produce a widening effect on the perceived sound field. Thus, speakers located close together are perceived as being separated by a greater distance.

When listening to sound from a two-speaker source, the left-channel signal arrives at the left ear before it arrives at the right ear, and the right-channel signal arrives at the right ear before it arrives at the left. The right ear, however, hears a little of the left-channel sound, and the left ear hears a little of the right-channel sound. That effect is called transaural acoustic crosstalk (Figure 1).

Figure 1. Transaural acoustic crosstalk consists of some sound from the right-hand stereo speaker reaching the left ear, and vice-versa.

As the speakers are moved closer together, these time delays diminish until sounds from the two speakers appear to come from a single speaker. The resulting crosstalk "tells" our brain that the sources are close together. To simulate a greater separation between two closely located audio sources, this crosstalk must be eliminated. By adding a cancellation signal from each speaker to the other, you can cancel the crosstalk for listeners directly in front of the source. Again, this acoustic cancellation gives listeners the impression of wider speaker separation.3

Phase Delay Cancels Crosstalk

The technique of introducing a phase delay to each of the signals driving multiple emitters is commonly used in radio-antenna arrays to control their beamwidth and directivity. Whereas a single antenna aligned along the z direction radiates equally in all directions along the x–y plane, that spread of radiation can be limited to several distinct lobes along the x–y plane by lining up several transmitting antennas. For a given distance between the antennas, the lobe width decreases with the number of antennas and with the frequency of the radio waves. For example, a five-element array with zero phase difference between the elements (i.e., all antennas emitting the same signal) produces the typical radiation pattern of Figure 2.

Figure 2. A five-element antenna array with zero phase differences between the elements produces this radiation pattern. The antennas are located at the origin and spaced at half-wavelength intervals along the x-axis.
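The shape of such a pattern can be reproduced numerically. The sketch below assumes the textbook normalized array factor for a uniform linear array, |sin(Nu/2) / (N sin(u/2))| with u = kd cos Ψ + α; the function name `array_factor` and the text-bar plot are illustrative choices, not part of the original article. With zero phase difference and half-wavelength spacing, kd = π.

```python
import math


def array_factor(n_elements, kd, alpha, psi):
    """Normalized |sin(N u / 2) / (N sin(u / 2))|, u = kd*cos(psi) + alpha.

    This is the standard uniform-linear-array form (assumed here).
    psi is the angle from the array axis (the positive x-axis), in radians.
    """
    u = kd * math.cos(psi) + alpha
    denom = n_elements * math.sin(u / 2.0)
    if abs(denom) < 1e-12:  # limit as u approaches a multiple of 2*pi
        return 1.0
    return abs(math.sin(n_elements * u / 2.0) / denom)


N = 5
KD = math.pi  # half-wavelength spacing: k*d = (2*pi/lambda) * (lambda/2)

for deg in range(0, 181, 15):
    f = array_factor(N, KD, 0.0, math.radians(deg))
    print(f"{deg:3d} deg  {f:5.3f}  " + "#" * round(20 * f))
```

The printed bars peak at 90°, perpendicular to the line of antennas, with smaller side lobes toward the array axis.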

Besides changing the lobe width, you can rotate the main lobe in the x–y plane by delaying the signal to each successive element in the array by a constant phase angle α (Figure 3). The array's radiation pattern is then proportional to an array factor F(u):
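For reference, the textbook normalized array factor for a uniform linear array, written with the symbols used in this article, has the form sketched below. The sign attached to α is an assumption: some texts write u = kd cos Ψ − α, depending on whether α is treated as a phase lead or a phase delay.

```latex
F(u) = \frac{\sin\!\left(\dfrac{N u}{2}\right)}{N \sin\!\left(\dfrac{u}{2}\right)},
\qquad
u = k d \cos\Psi + \alpha
```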

Where N is the number of antennas in the array; k = 2π/λ is the radiation wave number; d is the separation between antennas; and Ψ is the angle with the positive x-axis.4

Figure 3. These radiation patterns are produced by a five-element antenna array in which the phase difference between elements is π/2 (a), and 2π/3 (b).
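The rotation of the main lobe can be checked with a short scan over Ψ. This sketch assumes the textbook array factor |sin(Nu/2) / (N sin(u/2))| with u = kd cos Ψ + α and half-wavelength spacing (kd = π, as in Figure 2); under the opposite sign convention for α the lobe moves to the mirror-image angle. The names `array_factor` and `main_lobe_deg` are hypothetical helpers.

```python
import math


def array_factor(n_elements, kd, alpha, psi):
    """Normalized |sin(N u / 2) / (N sin(u / 2))|, u = kd*cos(psi) + alpha."""
    u = kd * math.cos(psi) + alpha
    denom = n_elements * math.sin(u / 2.0)
    if abs(denom) < 1e-12:  # limit as u approaches a multiple of 2*pi
        return 1.0
    return abs(math.sin(n_elements * u / 2.0) / denom)


def main_lobe_deg(n_elements, kd, alpha, step_deg=0.5):
    """Angle (degrees from the +x axis) at which the array factor peaks."""
    angles = [i * step_deg for i in range(int(180 / step_deg) + 1)]
    return max(
        angles,
        key=lambda a: array_factor(n_elements, kd, alpha, math.radians(a)),
    )


N, KD = 5, math.pi  # five elements, half-wavelength spacing (assumed)

for label, alpha in (("pi/2", math.pi / 2), ("2*pi/3", 2 * math.pi / 3)):
    print(f"alpha = {label:7s} -> main lobe near {main_lobe_deg(N, KD, alpha)} deg")
```

The peak sits where u = 0, that is, where cos Ψ = −α/(kd), so a larger phase step swings the lobe further away from broadside.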

Application to Sound

Because sound waves also obey the superposition principle, you can apply these results to the creation of a speaker array which directs the sound from one stereo channel to one ear and the sound from the other channel to the other ear (Figure 4).

Figure 4. In this block diagram of an audio-speaker array for stereo sound, the two buffer amplifiers each add α° of phase delay.

Because each HRTF is specific to the relationship between a given sound source and the listener, we must make some assumptions in deriving the HRTF that cancels transaural acoustic crosstalk for a specific application.

Assuming that the speakers are mounted on a handheld device, the parameter d should be no greater than 7cm. Next, assuming a 20cm head width and a distance of 50cm between the ears and the device, the angles ΨL and ΨR (measured from the positive x-axis to the listener's left and right ears, respectively) are approximately 78.5° and 101.5°. For the condition of no signal on the left and a nonzero signal on the right, the optimal phase difference is one that maximizes sound amplitude in the vicinity of the right ear (Figure 5).

Figure 5. For a signal applied only to the right channel in Figure 4, the ratio of sound amplitudes at the right and left ears is maximum when α = 90°, f = 6.1kHz, and d = 7cm.
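The two ear angles follow from simple geometry. The sketch below assumes that the device is centered in front of the listener and that the 50cm is measured along the straight line from the device to each ear; that reading is an assumption, chosen because it reproduces the quoted 78.5° and 101.5°. The constant names are illustrative.

```python
import math

HEAD_WIDTH = 0.20    # m, ear-to-ear spacing from the text
EAR_DISTANCE = 0.50  # m, assumed to be the device-to-ear line-of-sight distance

# Each ear sits half a head width off the center line, so the cosine of the
# angle between the x-axis and the line to the ear is (half width) / distance.
cos_psi = (HEAD_WIDTH / 2.0) / EAR_DISTANCE

psi_left_deg = math.degrees(math.acos(cos_psi))
psi_right_deg = 180.0 - psi_left_deg

print(f"cos(psi_left) = {cos_psi:.3f}")
print(f"psi_left  = {psi_left_deg:.1f} deg")
print(f"psi_right = {psi_right_deg:.1f} deg")
```

If the 50cm were instead measured perpendicular to the device, the angles would shift by only a few tenths of a degree.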

Looking back at equation 1, F(u) for a two-element array has a maximum when u = 0 and a minimum when u = π. For a nonzero signal on the right channel, therefore:

Thus, the optimal phase difference is -90°. Using the relationship k = 2πf/c, where c is the speed of sound, the corresponding frequency for d = 7cm is f ≈ 6.1kHz.
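The 6.1kHz figure can be checked with a short derivation. This sketch assumes that the array-factor argument has the textbook form u = kd cos Ψ + α, that the ears are placed symmetrically so cos ΨR = −cos ΨL ≈ −0.2, and that c ≈ 343m/s. Because the sign of α depends on the convention chosen, only its magnitude is derived here.

```latex
\begin{aligned}
u_L - u_R &= k d \left(\cos\Psi_L - \cos\Psi_R\right) = 2 k d \cos\Psi_L \\
\left|u_L - u_R\right| = \pi \;&\Rightarrow\; k d \cos\Psi_L = \frac{\pi}{2},
\qquad |\alpha| = \frac{\pi}{2} \\
f = \frac{k c}{2\pi} &= \frac{c}{4 d \cos\Psi_L}
\approx \frac{343\ \text{m/s}}{4 \times 0.07\ \text{m} \times 0.2}
\approx 6.1\ \text{kHz}
\end{aligned}
```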

This result is fortunate, because 6.1kHz lies close to the peak of sensitivity for the frequency response of our ears. The constant phase difference causes a performance degradation as the signal departs from this optimal frequency. Nonetheless, the constant-phase technique has been found superior to techniques that feature other phase-frequency relationships, such as linear phase versus frequency.
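To illustrate how the cancellation degrades away from the optimal frequency, the sketch below evaluates the two-element array factor, which reduces to |cos(u/2)|, at each ear for several frequencies. It assumes u = kd cos Ψ + α with a fixed 90° phase step oriented to favor the right ear, cos Ψ = ±0.2 for the two ears, d = 7cm, and c = 343m/s; the sign convention and the function name `ear_amplitudes` are assumptions.

```python
import math

C = 343.0            # m/s, assumed speed of sound
D = 0.07             # m, speaker spacing from the text
COS_EAR = 0.2        # |cos(psi)| for either ear (about 78.5 / 101.5 degrees)
ALPHA = math.pi / 2  # constant 90-degree phase step (sign convention assumed)


def ear_amplitudes(freq_hz):
    """Relative amplitude at the (right, left) ear for a right-channel signal."""
    kd = 2.0 * math.pi * freq_hz / C * D
    u_right = -kd * COS_EAR + ALPHA  # right ear: cos(psi) = -0.2
    u_left = kd * COS_EAR + ALPHA    # left ear:  cos(psi) = +0.2
    # For N = 2, |sin(u) / (2 sin(u/2))| simplifies to |cos(u/2)|.
    return abs(math.cos(u_right / 2.0)), abs(math.cos(u_left / 2.0))


for f in (1000, 3000, 6125, 9000):
    right, left = ear_amplitudes(f)
    print(f"{f:5d} Hz  right = {right:.3f}  left = {left:.3f}")
```

Under these assumptions the left-ear amplitude falls to essentially zero near 6.1kHz and rises again on either side, which is the degradation described above.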

Circuit Design

Networks that produce a constant phase shift (i.e., a phase difference) find considerable application in radio electronics, and techniques for their design have existed since the 1950s. The basic topology consists of two cascades of first-order allpass stages (Figure 6), which produce a nonconstant phase shift with respect to their common input. Over a specified frequency range, however, they maintain an approximately constant phase shift relative to each other.

Figure 6. A basic first-order allpass circuit.

Passive implementations are available, but the most commonly used first-order section is an active circuit (Figure 7). It presents a phase-shift filter with ft = 10kHz for the linear signal (the proper input channel), and an ft of 1kHz for the quadrature signal (the other input). The goal is a 90° phase shift between the linear and quadrature signals over the audio bandwidth of 1kHz to 10kHz.

Figure 7. Among cascaded, first-order, active allpass circuits, this one is most commonly used.

The cascaded, first-order, allpass circuit of Figure 7 achieves a phase difference between the L and Q outputs of close to 90° throughout the frequency range of 1kHz to 10kHz (Figure 8). The 1kHz to 10kHz range is acceptable, because most of the speakers in portable audio devices are too small to support full-spectrum audio. Typically, they offer very little response below 300Hz.

Figure 8. This response of the Figure 7 circuit shows a reasonable approximation to the desired 90° phase difference over the range 1kHz to 10kHz.
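The general shape of this response can be estimated from the ideal first-order allpass transfer function H(s) = (1 − s/ω0)/(1 + s/ω0), whose phase is −2·arctan(f/ft). The sketch below assumes one ideal section per branch with the corner frequencies quoted above (10kHz for the linear path, 1kHz for the quadrature path); the actual component values and stage count of the circuit are not given in the text, so this is an approximation and the function names are hypothetical.

```python
import math


def allpass_phase_deg(freq_hz, ft_hz):
    """Phase in degrees of an ideal first-order allpass with corner ft_hz."""
    return -2.0 * math.degrees(math.atan(freq_hz / ft_hz))


def phase_difference_deg(freq_hz, ft_linear=10e3, ft_quadrature=1e3):
    """Amount by which the quadrature output lags the linear output."""
    return (allpass_phase_deg(freq_hz, ft_linear)
            - allpass_phase_deg(freq_hz, ft_quadrature))


for f in (300, 1000, 2000, 3162, 5000, 10000, 20000):
    print(f"{f:6d} Hz  L-Q phase difference = {phase_difference_deg(f):6.1f} deg")
```

Under this one-section-per-branch assumption, the difference stays between roughly 79° and 110° from 1kHz to 10kHz and falls off outside that band, which is why additional stages are needed to hold the difference closer to 90° over a wider range.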

To improve the 3D effect, you can add more stages in cascade and then align them appropriately to widen the frequency range over which the circuit maintains a 90° phase shift. The two-stage cascade shown, however, is a good tradeoff among circuit complexity, power consumption, and performance. Audio ICs such as the MAX9775 incorporate a phase-delay circuit and audio amplifier that simulate a wider perceived sound field with a single chip.